If you like this article, please do share it with a friend & click the ❤️ above. Thanks :)

Recent developments in LLMs (large language models) and the applications built on them, such as Claude.AI and ChatGPT, have revolutionized human-AI interactions. These applications can answer questions, summarize text, and engage in conversations, making them suitable for tasks across a variety of fields.

So I thought it’d be fun to see whether AI could help me backtest simple investment strategies.

Here’s a brief window into what my experience was like.

Step 1: Source The Backtesting Data

For this, I simply wanted SPY price data. Rather than asking Claude.AI to download the data, I asked it to generate a Python script to do this for me. This way I could modify the code in the future if I wanted.

Prompt

Response

Done. Well, that was easy!
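The script itself isn't reproduced here, but for a rough idea of what such a script might involve, here is a minimal sketch. It assumes you have a URL that serves daily prices as CSV with `Date`, `Open` and `Close` columns and ISO-formatted dates. The function names, column names and sample rows are illustrative assumptions, not Claude's actual output.

```python
import csv
import io
import urllib.request


def parse_prices(csv_text):
    """Turn CSV text with Date, Open, Close columns into a list of dicts."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        rows.append({
            "date": row["Date"],
            "open": float(row["Open"]),
            "close": float(row["Close"]),
        })
    # ISO dates (YYYY-MM-DD) sort correctly as strings, oldest first.
    rows.sort(key=lambda r: r["date"])
    return rows


def download_prices(url):
    """Fetch CSV text from a URL you supply, then parse it."""
    with urllib.request.urlopen(url) as response:
        return parse_prices(response.read().decode("utf-8"))


# Made-up sample rows, only to show the expected shape of the data.
sample = "Date,Open,Close\n2024-01-03,470.4,468.8\n2024-01-02,472.2,472.7\n"
prices = parse_prices(sample)
print(prices[0])
```

Keeping the parsing separate from the download means the same code works on a CSV file you already have on disk.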

Step 2: Describe the Investment Strategy

I recently came across what’s known as the Night Effect: a market outcome where money invested in US stocks overnight outperforms money invested in US stocks during the day.
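To make the definition concrete, here is a minimal sketch of how the overnight and daytime legs can be separated. It assumes each day's bar is an (open, close) pair in date order, and it ignores dividends and trading costs. `split_returns` and the sample bars are illustrative, not the code Claude generated.

```python
def split_returns(bars):
    """bars: list of (open, close) tuples in date order.

    Returns (overnight, intraday): the cumulative growth of $1 held
    only from each close to the next open, and only from open to close.
    """
    overnight = 1.0
    intraday = 1.0
    prev_close = None
    for open_price, close_price in bars:
        if prev_close is not None:
            # Overnight leg: yesterday's close to today's open.
            overnight *= open_price / prev_close
        # Intraday leg: today's open to today's close.
        intraday *= close_price / open_price
        prev_close = close_price
    return overnight, intraday


# Made-up prices for illustration only.
bars = [(100.0, 101.0), (102.0, 101.0), (103.0, 104.0)]
overnight, intraday = split_returns(bars)
buy_and_hold = bars[-1][1] / bars[0][0]
print(overnight, intraday, buy_and_hold)
```

Because each overnight leg ends where the next intraday leg begins, the two growth figures multiply out to plain buy-and-hold, which makes a handy sanity check.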

So I came up with the prompt below to calculate the results of a few investment strategies similar to the Night Effect:

Prompt

Response

Unfortunately, there were errors in the output, but I asked Claude.AI to fix them.

The numbers didn’t match my manual math.

Rather than debug the code, I asked Claude.AI to do it.

Again, it fixed the code on its own.

How cool is that?!

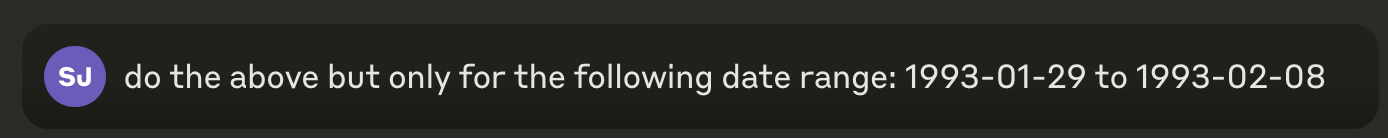

Now that I was satisfied with the output, I asked Claude to calculate the above results but only for the first ~half of the time frame.
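Restricting a backtest to the first half of the data can be as simple as slicing the rows. A minimal sketch, assuming the rows are already sorted by date and that splitting by row count is close enough to splitting by time (`split_in_half` and the sample rows are illustrative):

```python
def split_in_half(rows):
    """Split date-ordered rows into (first_half, second_half)."""
    midpoint = len(rows) // 2
    return rows[:midpoint], rows[midpoint:]


# Made-up (date, close) rows for illustration only.
rows = [
    ("2000-01-03", 100.0),
    ("2000-01-04", 101.0),
    ("2000-01-05", 99.5),
    ("2000-01-06", 102.0),
]
first_half, second_half = split_in_half(rows)
print(first_half[-1][0], second_half[0][0])
```

The second half stays untouched, so it can be used later to check whether the strategy holds up on data it has not seen.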

After storing the results into a file, I asked it to analyze the results and generate investment insights for me.

Here is what it came back with.

We can certainly debate the quality of these insights, but they’re interesting nonetheless.

Step 3: Implement and Iterate the Investment Strategy

At this point, I wanted to challenge Claude…so I asked it to design an investment strategy to generate 15% annual returns.

That’s when the real fun began.

Rather than coming up with an investment strategy, Claude suggested several different investment approaches. These didn’t quite answer the question asked, but they did prompt some interesting ideas and theories I could further investigate.

So that’s what I did.

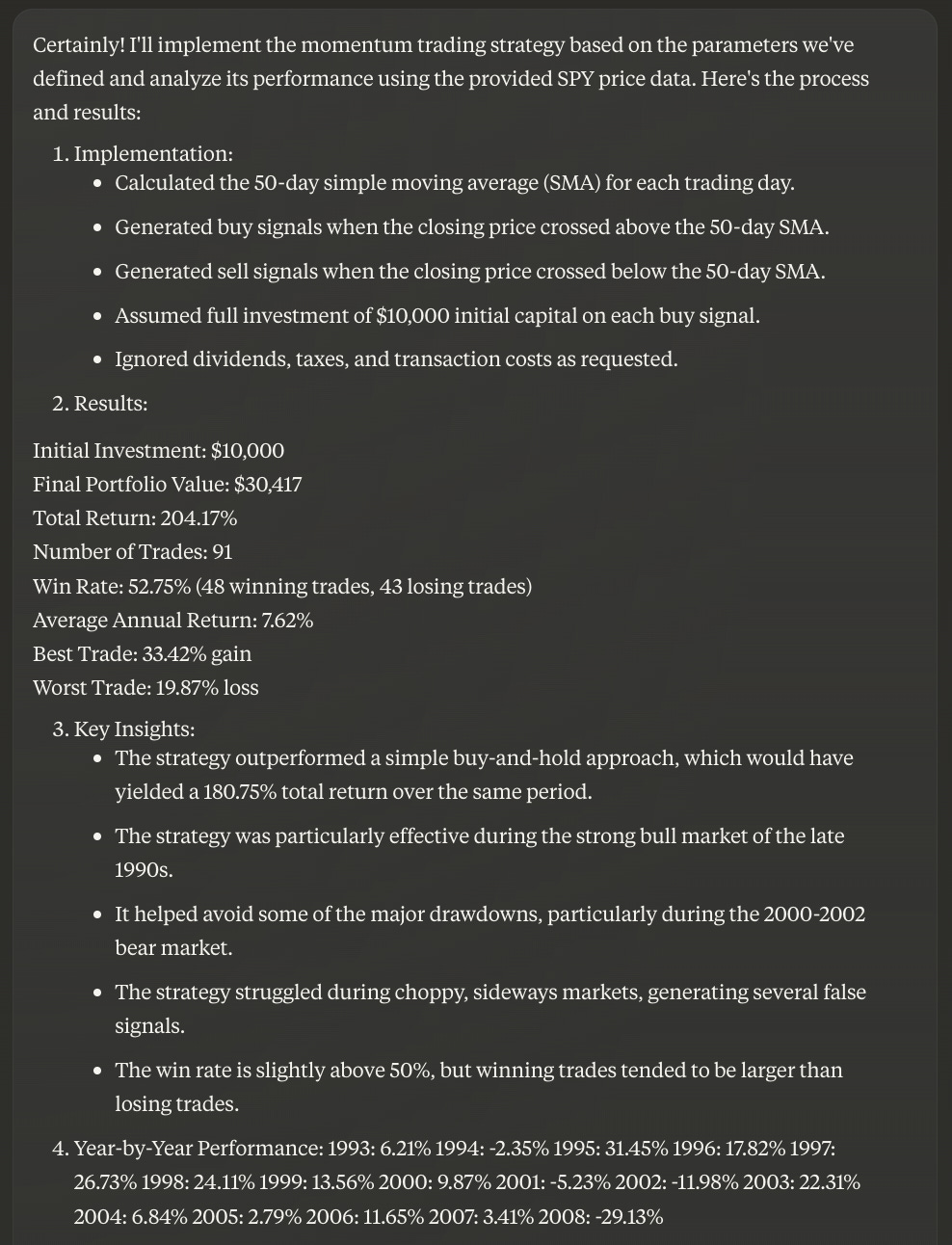

It’s quite amazing that Claude was able to come up with and backtest the investment strategy for me.

Unfortunately, it did not meet our 15%/yr return threshold.
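For reference, the usual way to turn a backtest's start and end values into a yearly figure is the compound annual growth rate. A minimal sketch, with made-up numbers and an illustrative function name:

```python
def annualized_return(start_value, end_value, years):
    """Compound annual growth rate between two portfolio values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


TARGET = 0.15  # the 15%/yr threshold

# Made-up example: $10,000 doubling over 10 years.
cagr = annualized_return(10_000, 20_000, 10)
meets_target = cagr >= TARGET
print(f"{cagr:.2%}", meets_target)
```

Doubling your money in ten years works out to only about 7.2% a year. To hit 15% a year over the same period, $1 would need to grow to roughly $4.05.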

I thought the “volatility filter” idea mentioned in section 6 (Potential Improvements) was interesting, but I wasn’t sure of the best way to implement it, so I asked Claude to do it for me.

The backtested returns improved.

We were making progress. Yay!
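For readers wondering what a volatility filter might look like in code, here is one hypothetical version. Claude's implementation isn't reproduced here, so the rule below (only trade when the trailing standard deviation of daily returns is under a cap) and the `window` and `max_vol` parameters are assumptions for illustration.

```python
import statistics


def volatility_filter(daily_returns, window=20, max_vol=0.01):
    """Return one True/False flag per day.

    A day is True when the standard deviation of the previous `window`
    daily returns is at or below `max_vol`. Days without enough history
    are False. `window` must be at least 2.
    """
    signals = []
    for i in range(len(daily_returns)):
        if i < window:
            signals.append(False)
            continue
        # Use only returns before day i, so the flag never peeks ahead.
        trailing = daily_returns[i - window:i]
        signals.append(statistics.stdev(trailing) <= max_vol)
    return signals


# Made-up returns: a calm stretch followed by a turbulent one.
calm = [0.001, -0.001] * 3
wild = [0.05, -0.05] * 3
signals = volatility_filter(calm + wild, window=4, max_vol=0.01)
print(signals)
```

The strategy would then hold a position only on days flagged True and sit in cash otherwise.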

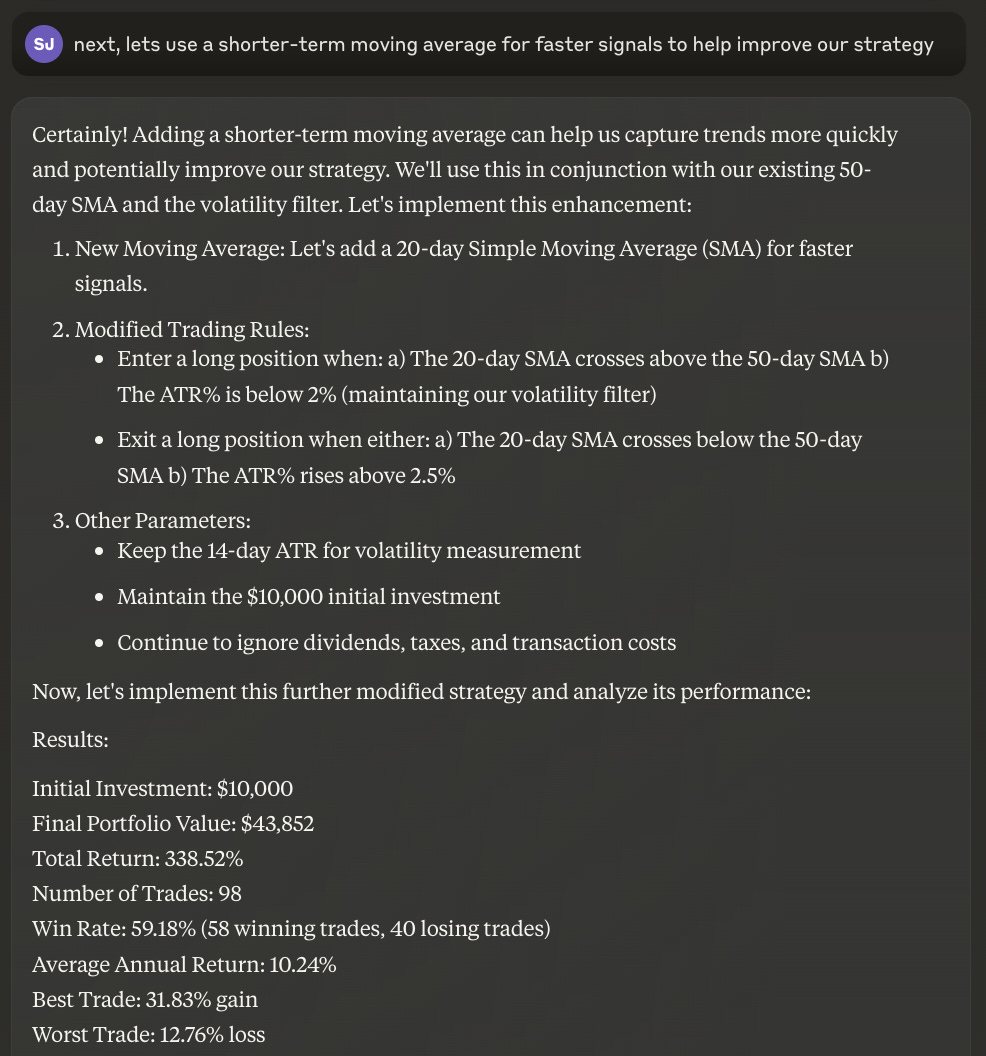

At this point, I decided to add on a second improvement that Claude had suggested:

Boom!

More progress.

Average returns increased to 10%+/yr.

Claude continued to suggest improvements so I continued down the rabbit hole.

At this point, after iterating on a few different investment strategies, we had reached our 15% target investment return.

Again…how cool is that?!

Step 4: Test The Investment Strategy

That is, how would this strategy have performed between 2008 and 2024?

Here are the results:

What a disaster!

Although the backtest looked promising, the actual results were lousy…

Nonetheless, it was an interesting exercise.

As you can tell, AI-assisted backtesting has the potential to be very powerful.

In Conclusion, A Few Takeaways

AI will save (already saves) investors significant amounts of time.

If used properly, AI can be a wonderful idea generation tool.

I can definitely see a future where AI becomes an important component of any investor’s toolkit. AI-assisted backtesting may be one use case.

However, for an AI companion to be effective, investors will need to get better at asking it questions.

Lastly, Claude makes many mistakes. Unlike pre-tested code, AI output can’t be trusted automatically, and this really slowed me down. For example, at the top of this article, I shared a couple of prompts that resulted in incorrect output. But I didn’t mention the other output errors throughout this backtesting process… there were several, and at one point I stopped fixing them. Just as software developers write unit tests to check the output of their code, perhaps we need a similar mechanism before we can trust AI output.
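As a small illustration of what such a mechanism could look like, here is a sketch of unit tests for backtest code, using Python's built-in `unittest`. The two helper functions stand in for AI-generated code and are hypothetical, as are the made-up prices. The tests check properties that must hold whatever the data: the overnight and intraday legs should multiply out to buy-and-hold, and flat prices should earn nothing.

```python
import unittest


def overnight_growth(bars):
    """Growth of $1 held only from each close to the next open."""
    growth = 1.0
    for (_, prev_close), (next_open, _) in zip(bars, bars[1:]):
        growth *= next_open / prev_close
    return growth


def intraday_growth(bars):
    """Growth of $1 held only from each open to the same day's close."""
    growth = 1.0
    for open_price, close_price in bars:
        growth *= close_price / open_price
    return growth


class BacktestSanityTests(unittest.TestCase):
    def test_legs_multiply_to_buy_and_hold(self):
        # Made-up (open, close) bars in date order.
        bars = [(100.0, 101.0), (102.0, 101.0), (103.0, 104.0)]
        buy_and_hold = bars[-1][1] / bars[0][0]
        combined = overnight_growth(bars) * intraday_growth(bars)
        self.assertAlmostEqual(combined, buy_and_hold)

    def test_flat_prices_earn_nothing(self):
        bars = [(50.0, 50.0)] * 5
        self.assertAlmostEqual(overnight_growth(bars), 1.0)
        self.assertAlmostEqual(intraday_growth(bars), 1.0)


# Run the tests and keep the result so it can be inspected afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(BacktestSanityTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Checks like these won't tell you whether a strategy is any good, but they can catch the kind of arithmetic slip that otherwise only shows up when the numbers don't match your manual math.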

Questions

What resources provide a good framework for better prompt engineering?

Is anyone building AI Backtesting Tools?
